Introduction:

Imagine a future where Artificial Intelligence (AI) is woven into every aspect of our lives – from the smart assistants in our homes to the technology that shapes how we work, shop, and connect with others.

The EU Artificial Intelligence Act (AI Act) is a comprehensive regulatory framework introduced by the European Union to govern the development and use of artificial intelligence (AI) within its member states. Its primary goals are to ensure that AI systems are safe, respect fundamental rights, and are used in a way that fosters trust and innovation.

Key Dates

- EU AI Act was published in the EU Official Journal on July 12, 2024

- Entered into force on August 1, 2024

- Will apply from August 2, 2026, except for the specific provisions listed in Article 113:

This Regulation shall enter into force on the twentieth day following that of its publication in the Official Journal of the European Union.

It shall apply from 2 August 2026.

However:

(a) Chapters I and II shall apply from 2 February 2025

(b) Chapter III Section 4, Chapter V, Chapter VII, and Chapter XII and Article 78 shall apply from 2 August 2025, with the exception of Article 101

(c) Article 6(1) and the corresponding obligations in this Regulation shall apply from 2 August 2027.
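The staggered dates above can be read as a simple lookup. The following is a minimal sketch, not an official tool: the labels `point_a`, `point_b`, `general`, and `point_c` and the function `applicable_groups` are hypothetical names introduced here for illustration, and the Article 101 exception in point (b) is not modelled.

```python
from datetime import date

# Application dates taken from the Article 113 excerpt above.
# The keys are illustrative labels, not terms used in the Act.
APPLICATION_DATES = {
    "point_a": date(2025, 2, 2),   # Chapters I and II
    "point_b": date(2025, 8, 2),   # Chapter III Section 4, Chapters V, VII, XII, Article 78
    "general": date(2026, 8, 2),   # everything not listed in points (a) to (c)
    "point_c": date(2027, 8, 2),   # Article 6(1) and corresponding obligations
}


def applicable_groups(on: date) -> list[str]:
    """Return the labels of the provision groups that already apply on a date."""
    return [label for label, start in APPLICATION_DATES.items() if on >= start]


print(applicable_groups(date(2026, 1, 1)))
```

Keeping the dates in one dictionary means a compliance checklist only has to be updated in a single place if the sketch is extended.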

Definitions of AI

AI is defined in the EU AI Act using the following terms:

- AI system: A machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

- General-purpose AI model: An AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications, except AI models that are used for research, development or prototyping activities before they are placed on the market.

- General-purpose AI system: An AI system which is based on a general-purpose AI model and which has the capability to serve a variety of purposes, both for direct use as well as for integration in other AI systems.

Purpose and Rationale Behind the EU AI Act

The EU Artificial Intelligence Act (AI Act) was introduced to address the growing impact of AI technologies on society and ensure that their development and use are safe, ethical, and respect fundamental rights. The main concerns behind the Act are:

As AI technologies become more integrated into critical areas like healthcare, transportation, and public safety, ensuring that these systems are reliable, robust, and do not pose undue risks is crucial.

Users and affected individuals need to understand how AI systems make decisions and be able to hold entities accountable for the outcomes of these systems.

AI systems can inadvertently introduce or amplify biases based on the data they are trained on, leading to discriminatory outcomes. Addressing these issues is essential for ensuring fairness and equality.

AI systems often process large amounts of personal data, which raises concerns about how data is collected, used, and protected. Ensuring compliance with data protection laws like the GDPR is a key aspect of the Act.

Striking a balance between promoting innovation and enforcing regulations helps maintain the EU’s competitive edge in AI technology while protecting public interests.

A unified approach helps prevent regulatory fragmentation among member states and provides a coherent set of rules for AI development and deployment.

As AI technology advances, it’s important to ensure that its deployment respects human rights and ethical norms, avoiding applications that could be harmful or unethical.

Risk Categorization in the EU AI Act

- Unacceptable Risk

AI systems that pose a threat to safety, fundamental rights, or ethical standards and are deemed too dangerous to be allowed within the EU.

Examples:

- Social Scoring Systems: AI systems used for evaluating and scoring individuals based on their behaviour or social status.

- Manipulative Techniques: AI systems designed to manipulate people, such as those used in social manipulation or psychological coercion.

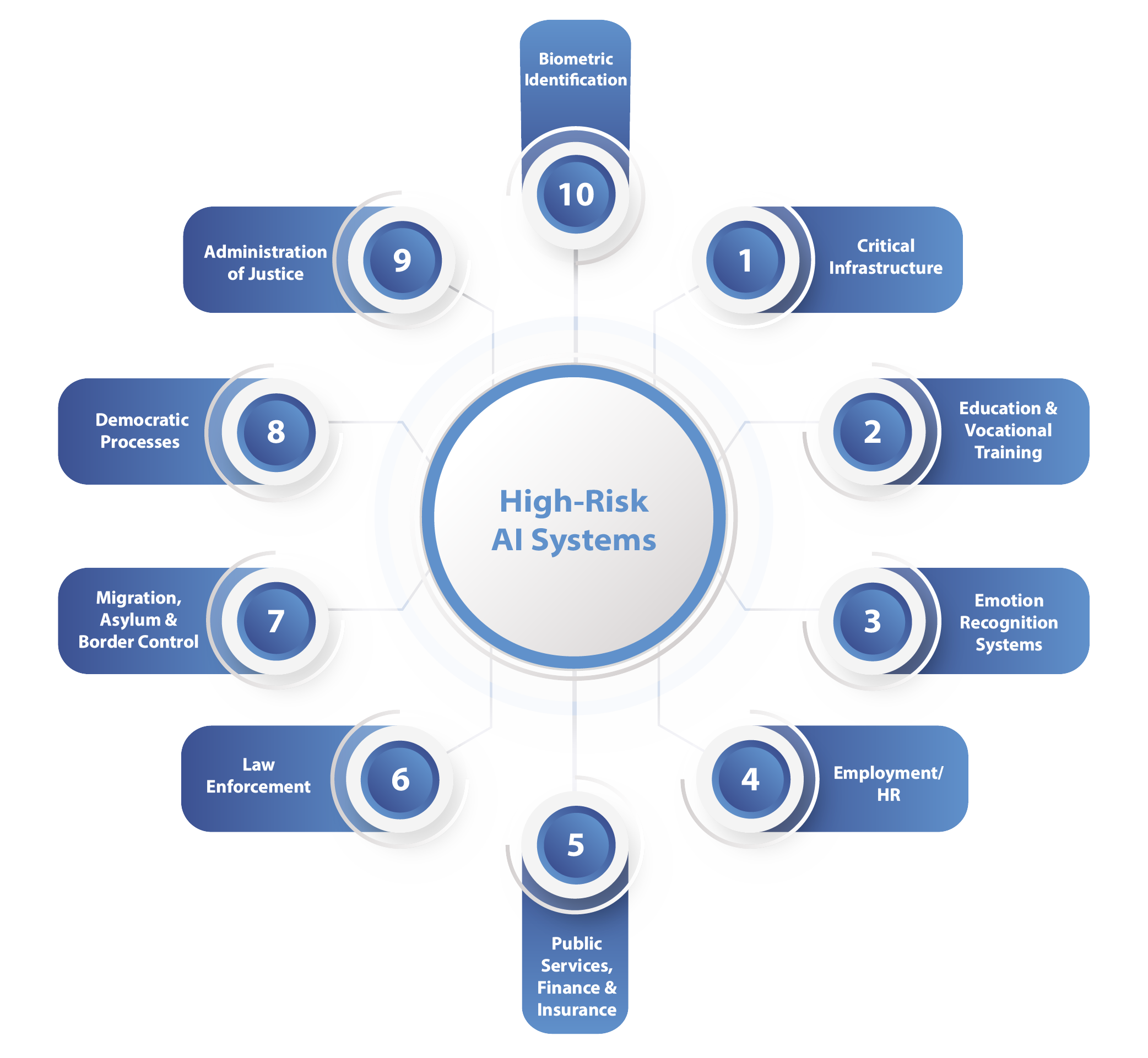

- High Risk

High-risk systems are permitted but must comply with multiple requirements and undergo a conformity assessment. This assessment needs to be completed before the system is released on the market.

- Limited Risk

AI systems that have a moderate impact on individuals and society, requiring specific transparency measures but less stringent regulation compared to high-risk systems.

Examples:

- Chatbots: AI systems used for customer service that interact with users but have limited impact on critical areas.

- Content Moderation: AI systems used for content moderation on social media platforms.

- Minimal Risk

AI systems that pose minimal or negligible risk to individuals and society, including most AI applications used in everyday life.

Examples:

- Recommendation Systems: AI systems used for recommending products or services based on user preferences.

- Virtual Assistants: Basic virtual assistants that perform routine tasks.
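The four tiers can be pictured as a small data structure. This is a hedged sketch of the summary given in this article, not a legal classification tool: `RiskTier`, `EXAMPLE_TIERS`, and `may_be_placed_on_market` are hypothetical names, and the mapping only repeats a few of the examples listed above.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers as summarized in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative mapping based on the article's own examples (assumption:
# a real classification depends on the system's actual use and context).
EXAMPLE_TIERS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "customer service chatbot": RiskTier.LIMITED,
    "product recommendation": RiskTier.MINIMAL,
}


def may_be_placed_on_market(tier: RiskTier, conformity_assessed: bool = False) -> bool:
    """Simplified gate: unacceptable-risk systems are never allowed, and
    high-risk systems need a completed conformity assessment first."""
    if tier is RiskTier.UNACCEPTABLE:
        return False
    if tier is RiskTier.HIGH:
        return conformity_assessed
    return True


print(may_be_placed_on_market(RiskTier.HIGH, conformity_assessed=True))
```

Limited-risk systems pass this gate, but the sketch does not model the transparency measures they must still meet.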

Impact on Stakeholders

- Provider (Article 3(3)):

‘Provider’ means a natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model or that has an AI system or a general-purpose AI model developed and places it on the market or puts the AI system into service under its own name or trademark, whether for payment or free of charge.

Providers must ensure their AI systems comply with the Act’s requirements, including risk assessments, documentation, and transparency measures. They are responsible for ensuring that their AI systems meet the applicable standards and regulations before placing them on the market or putting them into operation.

- Deployer (Article 3(4)):

‘Deployer’ means a natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity.

Deployers must ensure that the AI systems they use comply with the regulations, particularly for high-risk AI systems. This includes adhering to requirements for monitoring and maintaining the AI system’s performance and safety. They are also responsible for providing necessary documentation and information to ensure that the AI system operates within the legal framework.

- Importer (Article 3(6)):

‘Importer’ means a natural or legal person located or established in the Union that places on the market an AI system that bears the name or trademark of a natural or legal person established in a third country.

Importers must ensure that AI systems they bring into the EU comply with EU regulations. They are responsible for verifying that the AI systems meet all relevant requirements and standards and must work with providers and other stakeholders to ensure compliance with the Act.

- Distributor (Article 3(7)):

‘Distributor’ means a natural or legal person in the supply chain, other than the provider or the importer, that makes an AI system available on the Union market.

Distributors must ensure that the AI systems they distribute are compliant with the Act. They have obligations to verify that the products are correctly labelled and accompanied by the required documentation. Distributors must also assist in monitoring the performance and safety of the AI systems they distribute and report any issues.

- Affected Person:

This term generally refers to individuals or entities impacted by AI systems, including consumers, workers, or other stakeholders who interact with or are influenced by the AI systems.

Affected persons have rights and protections under the Act, including the right to be informed about the use of AI systems and their impact. They can also raise concerns or complaints regarding the deployment and use of AI systems, ensuring that their rights and interests are safeguarded.

Consequence of Non-Compliance

| Description | Amount |
|---|---|
| Non-compliance with Prohibition of AI Practices (Article 5) | Up to €35 million or up to 7% of total worldwide annual turnover, whichever is higher. |
| Non-compliance with any of the provisions listed in Article 99(4) | Up to €15 million or up to 3% of total worldwide annual turnover, whichever is higher. |
| Incorrect, incomplete, or misleading information to notified bodies or national competent authorities | Up to €7.5 million or up to 1% of total worldwide annual turnover, whichever is higher. |
| For SMEs, including start-ups | Penalties will be limited to the lower of the percentage of total worldwide annual turnover or the fixed monetary amounts specified above. |

The European Data Protection Supervisor may impose administrative fines on Union institutions, bodies, offices and agencies falling within the scope of this Regulation. Fines for Union institutions, bodies, offices and agencies are:

| Description | Amount |
|---|---|
| Non-compliance with Prohibition of AI Practices (Article 5) | Up to €1.5 million |
| Any other non-compliance | Up to €750,000 |
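The "whichever is higher" rule, and the "lower of" rule for SMEs, come down to simple arithmetic. The sketch below illustrates the maximum fine under those two rules only. The function `fine_cap` and its parameter names are hypothetical, and the turnover figures in the usage line are invented for illustration. The actual fine in any case is set by the competent authority and may be well below the cap.

```python
def fine_cap(fixed_eur: float, turnover_pct: float,
             annual_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of a fine for one tier of the penalty table.

    fixed_eur            -- fixed amount for the tier (e.g. 35_000_000)
    turnover_pct         -- percentage of turnover for the tier (e.g. 7)
    annual_turnover_eur  -- total worldwide annual turnover
    is_sme               -- SMEs and start-ups get the lower of the two amounts
    """
    pct_amount = annual_turnover_eur * turnover_pct / 100
    if is_sme:
        return min(fixed_eur, pct_amount)
    return max(fixed_eur, pct_amount)


# Hypothetical company with EUR 1 billion turnover, Article 5 tier:
print(fine_cap(35_000_000, 7, 1_000_000_000))
```

For a large company the percentage usually dominates, while for an SME the `min` branch keeps the cap proportional to its size.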

Regulators and their Functions

- European Commission

Proposes and oversees the implementation of the AI Act. Provides guidance, technical support, and tools for establishing and operating AI regulatory sandboxes.

Functions: Coordinates with national authorities, maintains a single information platform for stakeholder interaction, and monitors compliance across the EU.

- National Competent Authorities

Member States are required to establish competent authorities responsible for enforcing the AI Act at the national level.

Functions: Provide guidance and advice on the implementation of this Regulation, in particular to SMEs including start-ups, taking into account the guidance and advice of the Board and the Commission.

- European Data Protection Supervisor (EDPS)

Responsible for overseeing data protection aspects related to AI systems, particularly those involving Union institutions, bodies, offices, and agencies.

Functions: Establish an AI regulatory sandbox for Union institutions and ensure compliance with data protection laws.

- European Artificial Intelligence Board

Facilitates cooperation and consistency in the implementation of the AI Act across Member States.

Functions: Provides advice and recommendations on the Act’s application, supports coordination among national competent authorities, and helps ensure uniform enforcement.

- European AI Office

Plays a key role in the implementation of the AI Act, especially in relation to general-purpose AI models. It will also work to foster research and innovation in trustworthy AI and position the EU as a leader in international discussions.

Functions: Enables the future development, deployment and use of AI in a way that fosters societal and economic benefits and innovation, while mitigating risks.

Conclusion

As artificial intelligence rapidly reshapes our world, the EU AI Act plays a crucial role in guiding this technological evolution. By establishing a clear regulatory framework, the Act strikes a balance between encouraging innovation and ensuring that ethical and legal standards are met.

Imagine a self-driving car navigating busy city streets: the EU AI Act aims to ensure that it does so safely, transparently, and without compromising your privacy. Picture a medical AI system diagnosing diseases: the Act is designed to make sure it does so fairly and without bias. In every application, from smart assistants to automated decision-making, the Act aims to protect our rights while fostering a thriving, responsible AI ecosystem.

In essence, the EU AI Act is not just about rules and penalties; it’s about creating a future where technology enhances our lives while upholding the values we hold dear. By championing transparency, accountability, and innovation, the Act paves the way for a digital landscape where cutting-edge AI contributes positively to society, so that progress does not come at the expense of our fundamental rights and safety.

Disclaimer

The information provided in this article is intended for general informational purposes only and should not be construed as legal advice. The content of this article is not intended to create, and receipt of it does not constitute, any relationship. Readers should not act upon this information without seeking professional legal counsel.