Introduction

Artificial intelligence (AI) is spearheading the Fourth Industrial Revolution, redefining what is possible in industries ranging from technology to healthcare and significantly boosting productivity. However, this rapid advancement brings a multitude of ethical and regulatory challenges. Incidents such as the creation and spread of deepfake videos, along with other misuses of AI, have highlighted the urgent need for comprehensive regulatory frameworks. This article examines the global landscape of AI regulation and how different countries are navigating these uncharted waters.

The Need for AI Regulation

AI technologies, while beneficial, can pose significant risks if not properly managed. Recent studies, including one involving MIT researchers, suggest that AI assistance can increase worker productivity by around 14%. Alongside these benefits, however, come challenges related to privacy, security, and ethical use. The misuse of AI, such as the production of deepfake content, has raised alarms worldwide, highlighting the technology's potential to undermine social, political, and personal security.

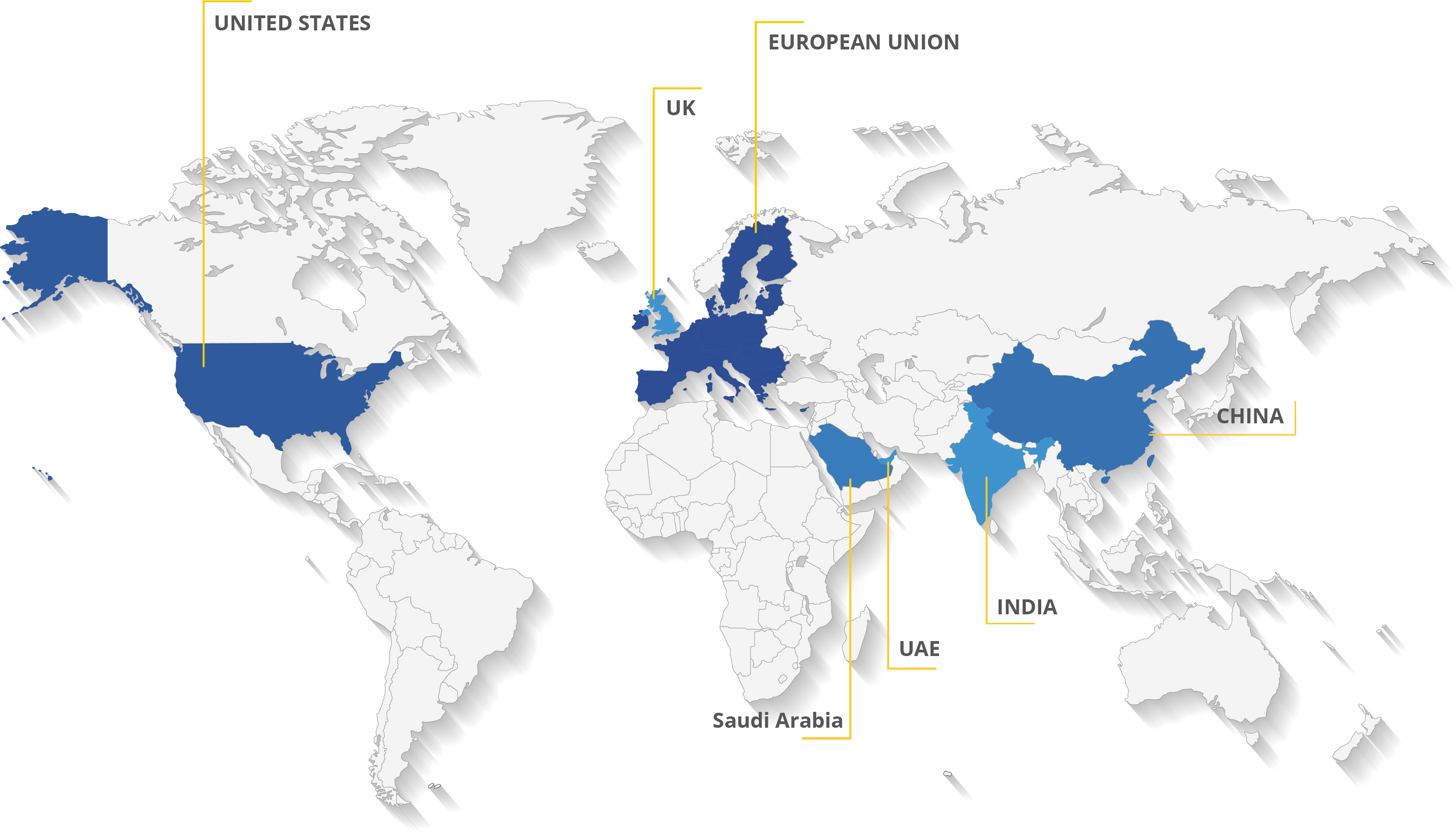

Global Responses to AI Regulation

Recognizing AI's dual nature, countries around the globe have begun to establish legal frameworks to manage the rise of intelligent systems. In 2022 alone, 37 AI-related bills were passed worldwide, reflecting a proactive legislative approach to this emerging technology. Below, we explore how various regions are addressing AI regulation.

The European Union: Setting a Benchmark with the AI Act

The EU has been at the forefront of AI regulation, proposing the AI Act, which categorizes AI systems according to their risk levels. The act is pioneering in its comprehensive approach, aiming to protect citizens' rights while fostering innovation. It sets out stringent requirements for "high-risk" AI systems, including transparency and data accuracy obligations, and establishes clear boundaries for unacceptable practices.

United States: A Fragmented Approach

The U.S. lacks a unified federal framework for AI regulation; individual states such as New York and Illinois have introduced their own legislation. For example, Illinois' Artificial Intelligence Video Interview Act requires employers to disclose the use of AI in analyzing video interviews. At the federal level, proposals such as the National AI Commission Act seek to establish a commission to review and recommend AI policies.

China: Strict Controls and Ambitious Plans

China’s approach to AI regulation focuses on security and control, with strict regulations against deepfake technologies and the misuse of AI in content creation. The Chinese government requires all AI-generated content to be clearly labeled, maintaining a tight grip on AI applications to ensure they align with national security and public interest.

Australia and Canada: Developing Ethical Frameworks

Both countries are currently enhancing their regulatory landscapes. Australia’s AI ethics framework guides the responsible development and deployment of AI technologies. Meanwhile, Canada's proposed Artificial Intelligence and Data Act aims to manage the risks associated with AI systems, emphasizing consumer protection and transparency.

Regulatory Requirements for AI Applications in Different Regions of the World:

| AI Application Type | Jurisdiction | Compliance Requirements |
|---|---|---|
| Healthcare Diagnostics | EU | GDPR: Strong data protection and patient privacy requirements |
| Autonomous Vehicles | USA, California | FMVSS, California DMV Regulations, AV START Act (proposed) |
| Personalized Advertising | EU | GDPR: Consent for personal data processing |
| Chatbots | USA, California | CCPA: Consumer privacy rights to know and delete personal data |
| Facial Recognition Systems | EU, UK | GDPR, UK Data Protection Act: Strict use and data storage limits |
| Fraud Detection Systems | USA, EU | Sarbanes-Oxley Act, GDPR: Data accuracy and access controls |
| Recommendation Systems | EU | GDPR: Transparency in data usage, right to explanation |
| HR Analytics | USA, California | CCPA, EEOC regulations: Non-discrimination and privacy |
| Industrial Automation | Germany | Industry 4.0 standards, Machine Safety Regulations |
| Smart Home Devices | USA, EU | FCC Rules, GDPR: Data security and consumer consent |

This table provides a concise overview of the regulatory frameworks and compliance requirements that apply to common AI applications in key jurisdictions. Such an overview helps stakeholders understand the necessary compliance measures and adjust their AI strategies accordingly.

| Year | Country | Legal Document or Regulation | Main Focus of Regulation | Reason for Regulation |
|---|---|---|---|---|
| 2018 | European Union | General Data Protection Regulation (GDPR) | Data protection and privacy of EU citizens | To address the risks of collecting and processing personal data, which also apply to AI systems, including generative AI, and to strengthen users' rights and control over their personal data. |
| 2020 | Saudi Arabia | National Strategy on Data and AI, aimed at fostering a welcoming, adaptable, and secure regulatory environment, including incentive programs designed to attract AI companies, investors, and skilled individuals. The country plans to capitalize on its young, dynamic population and its distinctive centralized ecosystem, and to attract foreign investment by hosting international AI gatherings and leveraging its position as a technology hub in the Middle East. | Broad scope: Saudi Arabia's AI ethics principles apply to all AI stakeholders who design, develop, deploy, implement, use, or are affected by AI systems within Saudi Arabia, including individuals and legal entities in both the public and private sectors. | To establish Saudi Arabia as a prominent player in using and exporting data and AI technologies by 2030. |
| 2021 | United States | National AI Initiative Act of 2020 (federal level, enacted January 2021) | Promotion and regulation of AI technology across all sectors | To establish a national strategy and standards for AI development, ensuring ethical use and mitigating the risks of AI technologies, including generative AI. |
| 2022 | China | Regulations on the Management of Deep Synthesis Technologies | Control and oversight of AI-generated content and deepfakes | To prevent the misuse of AI in creating misleading or harmful content, ensuring content authenticity and security. |
| 2022 | United Kingdom | Proposal for AI-specific regulation as part of the broader digital strategy | Regulation of AI within the scope of digital data and technology | To address emerging AI technologies and their implications for privacy, security, and societal norms while encouraging safe AI innovation. |
| 2023 | European Union | AI Act (proposal stage, with provisions specifically addressing the risks of generative AI) | Classification of AI systems by risk; specific rules for high-risk AI | To create a harmonized regulatory framework for AI systems, particularly generative AI, ensuring safety, transparency, and accountability in their use. |
| 2023 | India | Proposed Digital India Act, set to replace the IT Act of 2000 and oversee high-risk AI systems. The government emphasizes a strong, citizen-centric, and inclusive environment that promotes "AI for all," has formed a task force to address the ethical, legal, and societal concerns raised by AI, and plans to establish an AI regulatory authority. As outlined in its National Strategy for AI, India aims to become an "AI garage" for emerging and developing economies, creating scalable solutions for global deployment. | Modernize regulations and specifically regulate high-risk AI systems, replacing the outdated IT Act of 2000 | To bring regulation in line with current technological developments. |
| 2023 | UAE | In 2017, the UAE became the first country to appoint a Minister of State for Artificial Intelligence, and it has since issued guidance resources on AI. | Prioritizing AI in its current main industries, such as logistics, energy, and tourism, as well as in the increasingly important healthcare and cybersecurity sectors | To ensure that legal requirements and ethics are embedded in AI tools and systems "by design." |

Each entry in the table reflects the strategic objectives of different jurisdictions in managing the rapid development and deployment of AI technologies, particularly generative AI. The reasons for these regulations highlight a common theme of enhancing security, protecting personal data, and ensuring ethical use of AI technologies, adapted to the specific needs and concerns of each region.

Nations with the Highest Investment in Artificial Intelligence:

The United States and China had the greatest number of cross-country collaborations in AI publications from 2010 to 2021. The number of AI research collaborations between the two countries increased roughly fourfold since 2010 and was 2.5 times greater than the collaboration total of the next-nearest country pair, the United Kingdom and China. Below are the nations with the most investment in AI research and technology as of 2024, from the United States to Canada:

- United States

According to MacroPolo, about 60% of "top-tier" AI researchers are employed by US institutions and businesses, and Mirae Asset estimates that $249 billion in private financing has been raised to date for AI research, making the US the leading nation in the field. Several of the best-known AI providers, including OpenAI, Google, Meta, and Anthropic, are headquartered in the San Francisco Bay Area. These companies are behind some of the industry's top products, such as GPT-4, DALL-E 3, Gemini, Llama 2, and Claude 3. With 100 million weekly active users, ChatGPT, OpenAI's chatbot built on its GPT models, is arguably the flagship product of the AI race at this stage.

The U.S. government is also actively investing in AI research and development, spending USD 3.3 billion on the technology in 2022.

- China

China is the second-largest investor in AI research, with 11% of the world's top AI researchers (MacroPolo), 232 AI-related projects in 2023, and $95 billion in private investment raised between 2022 and 2023 (Mirae Asset). Companies such as Tencent, Huawei, and Baidu are leading the nation's AI innovation with releases like Tencent's Hunyuan large language model (LLM), a Chinese counterpart to ChatGPT; Huawei's Pangu LLM, with 1.085 trillion parameters; and Baidu's Ernie AI model, which the company claims offers capabilities on par with GPT-4.

The Chinese government is also making significant investments in the AI arms race; according to IDC, China's AI spending will reach $38.1 billion by 2027, or 9% of global investment.

- United Kingdom

The UK has been a major player in the AI race for many years. Indeed, with a current market value of $21 billion, which the International Trade Administration (ITA) projects will reach $1 trillion by 2035, the UK is the third-largest AI market in the world, behind the US and China.

The country is home to several prominent AI companies, such as Darktrace, which uses AI to help businesses detect cloud-based threats in real time, and DeepMind, the leading AI research lab behind AlphaGo and AlphaFold.

Prime Minister Rishi Sunak is reportedly planning to increase taxpayer spending on chips and supercomputers to £400 million, including a £100 million investment in a supercomputer facility in Bristol in partnership with Hewlett Packard Enterprise and the University of Bristol. Government spending on research and development is also rising. At Davos in January, UK leaders shared their views on the future of AI, recognizing its potential while calling for international regulation.

- Israel

The Israeli IT industry has emerged as a leader in artificial intelligence development, with $11 billion in private investment made between 2013 and 2022 (Mirae Asset), the fourth-highest amount globally.

As of 2023, 144 generative AI firms were operating in the country, and $2.3 billion had been invested in the space, according to Ctech. In addition, the Israeli government has announced plans to contribute $8 million to boost the creation of AI apps in Hebrew and Arabic.

Several well-known AI-driven businesses are based in the country, such as SentinelOne, an AI-powered enterprise security provider; AI21 Labs, the maker of Wordtune; and Deep Instinct, an AI-driven cybersecurity platform.

- Canada

Canada invested $2.57 billion in AI research between 2022 and 2023, bringing its total investment in AI to $8.64 billion.

The Canadian government has also committed to funding the national development of responsible AI, awarding over $124 million to the Université de Montréal through the Canada First Research Excellence Fund in June 2023.

Leading AI businesses operating in the country include Cohere, an enterprise LLM provider; Scale AI, a generative AI platform; and Coveo, an AI search provider.

Compliance Overview:

Importance of Regulatory Frameworks in AI:

The dynamic landscape of artificial intelligence (AI) calls for robust regulatory frameworks to address ethical and compliance challenges. Despite AI's potential for productivity gains and transformative impact, concerns such as the proliferation of deepfakes highlight the urgent need for comprehensive regulation.

Diverse Global Approaches to AI Regulation:

Nations worldwide are responding to the need for AI regulation with varied strategies. The European Union leads with its pioneering AI Act, which categorizes AI systems by risk and mandates strict compliance measures. In contrast, the United States takes a decentralized approach, with states like New York and Illinois enacting their own legislation, while federal initiatives such as the proposed National AI Commission Act aim to lay the groundwork for unified policies. China emphasizes strict controls in the name of national security, requiring clear labeling of AI-generated content. Meanwhile, Australia and Canada focus on developing ethical frameworks to guide responsible AI development.

European Union's Leading Role:

The European Union's AI Act sets a benchmark for comprehensive AI regulation by categorizing AI systems based on risk levels and imposing stringent compliance measures. This approach aims to balance AI's potential benefits with regulatory oversight to mitigate risks and protect societal interests.

Global Commitment to Responsible AI Development:

The multifaceted responses from nations underscore a global commitment to balancing AI's potential benefits with regulatory compliance. Whether through centralized legislation, decentralized approaches, or an emphasis on ethical frameworks, countries recognize the importance of addressing AI's ethical and compliance challenges to ensure responsible development and safeguard societal interests.

Conclusion

The journey towards comprehensive AI regulation is complex and ongoing. As countries around the world grapple with the nuances of AI technologies, the need for robust, informed, and flexible regulatory frameworks is clear. The race among countries to lead in artificial intelligence is closely tied to how much each one invests in developing the technology, and investment around the globe continues to grow with no sign of slowing. By learning from each other and striving for a balanced approach, the global community can harness the benefits of AI while safeguarding against its risks, ensuring a future where technology works for everyone.

Disclaimer

The information provided in this article is intended for general informational purposes only and should not be construed as legal advice. This article is not intended to create, and receipt of it does not constitute, any attorney-client relationship. Readers should not act upon this information without seeking professional legal counsel.